Prompt engineering best practices

In the following list, we outline additional best practices for creating more effective prompts:

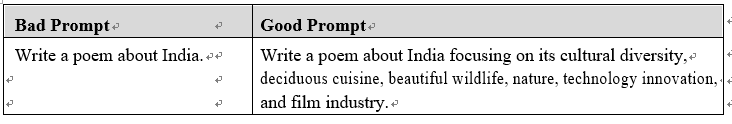

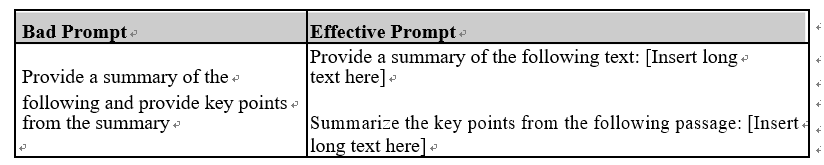

- Clarity and precision for accurate responses: Ensure that prompts are clear, concise, and specific, avoiding ambiguity or multiple interpretations:

Figure 5.12 – Best practice: clarity and precision

- Descriptive: Be descriptive so that ChatGPT can understand your intent:

Figure 5.13 – Best practice: be descriptive

- Format the output: Specify the format of the output. This can be bullet points, paragraphs, sentences, or tables, or a structured language such as XML, HTML, or JSON. Use examples to illustrate the desired output.
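The sketch below shows one way to apply this. The prompt names the required format and includes an example of it, and the application then checks that the reply actually parses as JSON. The function names, the `sentiment`/`summary` schema, and the sample reply are illustrative assumptions, not part of any API.

```python
import json

def build_json_prompt(review: str) -> str:
    """Build a prompt that names the output format and shows an example of it."""
    # Hypothetical schema, used only for illustration.
    example = {"sentiment": "positive", "summary": "Fast delivery and good quality."}
    return (
        "Classify the sentiment of the review below and summarize it in one sentence.\n"
        "Respond only with JSON in exactly this format:\n"
        f"{json.dumps(example)}\n\n"
        f"Review: {review}"
    )

def parse_reply(reply: str) -> dict:
    """Parse the model's reply and check that it has the requested keys."""
    data = json.loads(reply)  # raises json.JSONDecodeError if the reply is not JSON
    if not isinstance(data, dict):
        raise ValueError("reply is not a JSON object")
    missing = {"sentiment", "summary"} - set(data)
    if missing:
        raise ValueError(f"reply is missing keys: {sorted(missing)}")
    return data

prompt = build_json_prompt("The blender broke after two days.")
print(prompt)

# Hand-written stand-in for a model reply (hypothetical), used only to exercise the parser.
sample_reply = '{"sentiment": "negative", "summary": "The blender failed quickly."}'
print(parse_reply(sample_reply)["sentiment"])  # negative
```

Putting an example of the format in the prompt and validating the reply in code work together. The example steers the model, and the check catches the cases where it still drifts.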

- Adjust the Temperature and Top_p parameters for creativity: As described in the parameters section, modifying Temperature and Top_p can significantly influence the variability of the model’s output. In scenarios that call for creativity and imagination, a higher temperature is beneficial. Conversely, in legal applications, where reducing hallucinations is critical, a lower temperature is preferable.
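The sketch below makes the effect of these two parameters concrete. It applies temperature scaling and nucleus (Top_p) filtering to a small distribution over four candidate tokens. It is a simplified illustration of how these sampling parameters are commonly defined, not the internal implementation of any particular model or service, and the logits are invented for the example.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities; a lower temperature sharpens the distribution."""
    # temperature must be greater than 0 in this sketch (0 would divide by zero)
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose cumulative probability
    reaches top_p, zero out the rest, and renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return [probs[i] / mass if i in kept else 0.0 for i in range(len(probs))]

logits = [2.0, 1.0, 0.5, 0.1]  # invented scores for four candidate tokens
for t in (0.2, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

print(top_p_filter(softmax_with_temperature(logits, 1.0), 0.9))
```

At a temperature of 0.2, almost all of the probability lands on the top token, which gives more deterministic output. At 1.5, the distribution flattens, which gives more varied output. A lower Top_p removes unlikely tokens from consideration entirely.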

- Use syntax as separators in prompts: For more effective output, use """ or ### to separate the instruction from the input data:

Example:

Convert the text below to Spanish

Text: """

{text input here}

"""
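A small helper can assemble this kind of prompt programmatically. In the sketch below, the function name `build_translation_prompt` and the choice to replace any """ inside the input with ''' are illustrative assumptions. The replacement keeps the user's text from closing the delimited block early and being read as instructions.

```python
def build_translation_prompt(text: str, language: str = "Spanish") -> str:
    """Put the instruction first and wrap the input data in triple-quote delimiters."""
    # Hypothetical safeguard: neutralize embedded triple quotes so the input
    # cannot terminate the delimited block.
    safe_text = text.replace('"""', "'''")
    return f'Convert the text below to {language}\n\nText: """\n{safe_text}\n"""'

print(build_translation_prompt("Good morning, how are you?"))
```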

- The order of prompt elements matters: In certain cases, placing the instruction before the examples has been found to improve the quality of the output. The order of the examples themselves can also affect the output.
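One way to act on this is to keep the instruction at the top of the prompt. Because example order can matter, you can also generate every ordering of a small set of few-shot examples and compare the model's answers side by side. The sketch below assumes this approach; the sentiment examples and the helper name `build_prompt` are hypothetical.

```python
from itertools import permutations

def build_prompt(instruction, examples, query):
    """Instruction first, then the labeled examples, then the new input."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Text: {text}\nSentiment: {label}\n")
    lines.append(f"Text: {query}\nSentiment:")
    return "\n".join(lines)

instruction = "Classify the sentiment of each text as Positive or Negative."
examples = [("I loved this movie!", "Positive"), ("The service was terrible.", "Negative")]

# One prompt per ordering of the examples; send each to the model and compare the answers.
variants = [build_prompt(instruction, list(order), "The food was cold.")
            for order in permutations(examples)]
print(len(variants))  # 2
print(variants[0])
```

With n examples there are n! orderings, so this exhaustive comparison is only practical for a handful of examples.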

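- Use guiding words: Guiding (leading) words help steer the model toward a specific structure. In the following prompt, for example, the closing def ctf(): signals that the model should continue by writing a Python function: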

Example:

#Create a basic Python function that

#1. Requests the user to enter a temperature in Celsius

#2. Converts the Celsius temperature to Fahrenheit

def ctf():
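Ending the prompt with def ctf(): tells the model that its completion should be the body of a Python function. One plausible completion is sketched below. It is a hand-written illustration, not actual model output, and the separate `celsius_to_fahrenheit` helper is an assumption added for readability and testing.

```python
def celsius_to_fahrenheit(celsius: float) -> float:
    """Apply F = C * 9/5 + 32."""
    return celsius * 9 / 5 + 32

def ctf():
    # 1. Request a temperature in Celsius from the user
    celsius = float(input("Enter a temperature in Celsius: "))
    # 2. Convert it to Fahrenheit and show the result
    fahrenheit = celsius_to_fahrenheit(celsius)
    print(f"{celsius} °C is {fahrenheit} °F")
    return fahrenheit
```

Call `ctf()` to run the conversion interactively.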

- Instead of saying what not to provide, give alternative recommendations: Provide an alternative path for when ChatGPT cannot perform a task, such as the fallback response defined in the following system message:

Example:

System Message: You are an AI nutrition consultant that provides nutrition consultation based on the customer’s health and wellness goals. Please note that any questions or inquiries beyond the scope of nutrition consultation will NOT be answered and instead will receive the response: “Sorry! This question falls outside my domain of expertise!”

Customer: How do I invest in a 401(k)?

Nutrition AI Assistant: “Sorry! This question falls outside my domain of expertise!”
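OpenAI-style chat completion APIs send the system message and the customer's question as a list of messages, each with a role and content. The sketch below only builds that list and checks whether a reply is the agreed fallback. It does not call any service, and the helper names `build_messages` and `is_fallback` are hypothetical.

```python
FALLBACK = "Sorry! This question falls outside my domain of expertise!"

SYSTEM_MESSAGE = (
    "You are an AI nutrition consultant that provides nutrition consultation "
    "based on the customer's health and wellness goals. Any questions or inquiries "
    "beyond the scope of nutrition consultation will NOT be answered and instead "
    f'will receive the response: "{FALLBACK}"'
)

def build_messages(question: str) -> list[dict]:
    """Return the message list: system instructions first, then the customer's question."""
    return [
        {"role": "system", "content": SYSTEM_MESSAGE},
        {"role": "user", "content": question},
    ]

def is_fallback(reply: str) -> bool:
    """True if the model returned the out-of-scope response instead of an answer."""
    # Tolerate surrounding whitespace and double quotes around the fallback text.
    return reply.strip().strip('"') == FALLBACK

messages = build_messages("How do I invest in a 401(k)?")
print(messages[0]["role"], "->", messages[1]["content"])
print(is_fallback('"Sorry! This question falls outside my domain of expertise!"'))  # True
```

Defining the fallback text once and reusing it in both the system message and the check keeps the two from drifting apart.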

- Provide example-based prompts: Examples help the language model learn from specific instances and patterns. Start with a zero-shot prompt, then try a few-shot prompt, and if neither works, fine-tune the model.

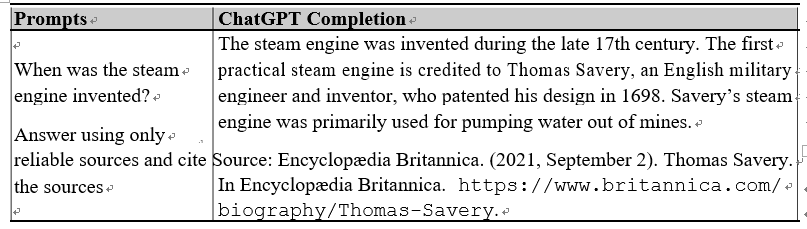
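A zero-shot prompt and a few-shot prompt differ only in whether labeled examples are included. The minimal sketch below shows both. The ticket-classification task and the example pairs are invented for illustration.

```python
def zero_shot(task: str, query: str) -> str:
    """Instruction and input only; the model relies on what it already knows."""
    return f"{task}\n\nInput: {query}\nOutput:"

def few_shot(task: str, examples: list[tuple[str, str]], query: str) -> str:
    """Instruction, a few input/output pairs that demonstrate the pattern, then the new input."""
    shots = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{task}\n\n{shots}\n\nInput: {query}\nOutput:"

task = "Classify the support ticket as Billing, Technical, or Other."
examples = [
    ("I was charged twice this month.", "Billing"),
    ("The app crashes when I log in.", "Technical"),
]
print(zero_shot(task, "How do I reset my password?"))
print()
print(few_shot(task, examples, "How do I reset my password?"))
```

If the few-shot version still gives unreliable results, a larger set of input/output pairs like these is the kind of data that fine-tuning would use.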

- Ask ChatGPT to provide citations/sources: When asking ChatGPT for information, you can instruct it to answer using only reliable sources and to cite those sources:

Figure 5.14 – Best practice: provide citations
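A prompt template for this might look like the sketch below. It also pulls the URLs out of the answer so that each cited source can be opened and checked. The wording of the instruction, the "Sources:" convention, and the helper names are assumptions for illustration.

```python
import re

def build_cited_prompt(question: str) -> str:
    """Ask for an answer based only on reliable sources, with each source cited."""
    return (
        f"{question}\n\n"
        "Answer using only reliable sources. After the answer, list every source you "
        "relied on under a 'Sources:' heading, one URL per line."
    )

def extract_urls(answer: str) -> list[str]:
    """Collect the http(s) URLs in an answer so each one can be opened and checked."""
    return re.findall(r"https?://[^\s)\]>]+", answer)

print(build_cited_prompt("What are the health benefits of dietary fiber?"))

# Hand-written stand-in for a model answer (hypothetical), used only to exercise extract_urls.
sample = "Fiber supports digestion.\nSources:\nhttps://www.example.org/fiber\n"
print(extract_urls(sample))  # ['https://www.example.org/fiber']
```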

- Break down a complex task into simpler tasks: See the following example:

Figure 5.15 – Best practice: break down a complex task
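In code, breaking a task down often takes the form of a chain of prompts, where each step's output becomes the next step's input. The sketch below assumes a generic `complete(prompt)` function that stands in for whatever model call you use. Here it is replaced by a hypothetical placeholder that echoes the last line of its prompt, so the chain can run without a model. The three steps are illustrative.

```python
from typing import Callable

def run_chain(document: str, complete: Callable[[str], str]) -> str:
    """Split one complex request into three simpler prompts run in sequence."""
    # Step 1: extract the key points.
    points = complete(f"List the key points of the following text:\n{document}")
    # Step 2: summarize only those points.
    summary = complete(f"Write a three-sentence summary of these key points:\n{points}")
    # Step 3: translate the short summary.
    return complete(f"Translate this summary into Spanish:\n{summary}")

def echo_model(prompt: str) -> str:
    """Hypothetical placeholder for a real model call: returns the prompt's last line."""
    return prompt.splitlines()[-1]

print(run_chain("Prompt engineering improves model output.", echo_model))
```

Because each step is a separate prompt, you can inspect or correct the intermediate results (the key points, the summary) before passing them on.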